React is a popular JavaScript library for building dynamic, high-performing web interfaces, originally developed at Meta (formerly Facebook). With React, developers can build scalable, reusable components without worrying about inconsistent user interfaces. However, a React single-page application (SPA) is not automatically optimized for search engines.

“According to the Stack Overflow Developer Survey 2020, React is one of the most widely used web frameworks among programmers, and more than 60% of the developers who use it said they want to keep working with it.”

Websites need to deliver a consistent user experience and also be visible to search engines. Otherwise, your valuable content will not reach end users. This raises an important question: is React.js SEO friendly? Despite its development benefits, React does present SEO difficulties.

SEO and Its Importance in React Development

Search engine optimization (SEO) refers to the practices used to make a website more visible in search engine results pages. The goal is to rank as high as possible for relevant queries so that more of your intended audience finds you.

“Google search data shows that the top organic result earns 27.6% of clicks, whereas results on the second page get just 0.63%. This huge difference drives businesses to strive for top ranking.”

You need up-to-date SEO tactics to generate clicks and expand your audience. One pillar of search engine optimization is the technology stack you build on. In this respect, relying on React brings both advantages and disadvantages for SEO.

React lets you develop dynamic websites quickly, but competing for the top of search results can be a challenge.

Is React Good for SEO?

Though React is a great option for creating user-friendly web applications, its single-page application architecture can cause notable SEO issues. Server-side rendering (SSR) is often seen as superior to client-side rendering (CSR) for SEO, mainly because server-rendered pages arrive as ready-made HTML and load quickly. Search engine bots crawl websites to discover new pages to index, but they often have difficulty fully rendering sites that rely heavily on JavaScript, such as React sites.

As a result, React sites may not be indexed correctly, which hurts their SERP rankings.

This is not to say that React sites cannot be search engine friendly. Developers can take a number of steps to optimize their websites for search engines.

How Do Google Crawlers Work?

Optimizing your React website for search engines requires understanding what search engine spiders do. Spiders, also known as crawlers, are automated programs that scan websites for content, links, and keywords. What the spider collects is stored on the search engine’s servers for indexing and ranking. SEO makes it easier for crawlers to find and index the information on your site, which improves your ranking in search results.

How Google Indexes and Crawls Websites

This is the step-by-step process Google uses to crawl and index web pages.

Crawling

Googlebot sends GET requests to a server for the URLs in its crawl queue and stores the responses. It does this for HTML, JS, CSS, image files, and other file types.

Processing

At this stage, Google adds the URLs found in the HTML’s <a href> links to the crawl queue. It also queues any resource URLs (CSS/JS) referenced in <link> and <script> tags, as well as images referenced in <img> elements. If Googlebot discovers a noindex tag, it stops processing the page (that is, it does not render it), and Google’s indexer, Caffeine, will not index the content.
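The processing step can be illustrated with a minimal sketch. The helper name `processHtml` and the `ProcessedPage` shape are hypothetical, and the regular expressions are a simplification for illustration only; this is not how Googlebot is actually implemented, and a real crawler would use a proper HTML parser.

```typescript
// Hypothetical sketch of the "processing" step: collect <a href> links
// and detect a robots noindex directive. Regex-based for brevity only.

interface ProcessedPage {
  links: string[];   // URLs found in <a href="..."> attributes
  noindex: boolean;  // true when a robots meta tag contains "noindex"
}

function processHtml(html: string): ProcessedPage {
  const links: string[] = [];
  const anchorPattern = /<a\s[^>]*href\s*=\s*["']([^"']+)["']/gi;
  let match: RegExpExecArray | null = anchorPattern.exec(html);
  while (match !== null) {
    links.push(match[1]);
    match = anchorPattern.exec(html);
  }

  // Simplification: assumes the name attribute comes before content.
  const robotsPattern =
    /<meta\s[^>]*name\s*=\s*["']robots["'][^>]*content\s*=\s*["']([^"']*)["']/i;
  const robots = robotsPattern.exec(html);
  const noindex =
    robots !== null && robots[1].toLowerCase().indexOf("noindex") !== -1;

  return { links, noindex };
}
```

Running this against the bare HTML shell of a client-rendered app (for example `<div id="root"></div>`) returns no links at all, which is the core of the crawling problem described in the sections below.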

Rendering

Googlebot uses a headless Chromium browser to run JavaScript code and find content that exists in the rendered DOM but not in the HTML source. It does this for all HTML URLs.

Indexing

Caffeine analyzes Googlebot data, normalizes it (fixes broken HTML), and then attempts to make sense of it all, precalculating some ranking signals for use in a search result.

Serving and Ranking

Serving and ranking make up the final phase of Google’s process and are the only part users actually see; the earlier steps occur behind the scenes.

Hundreds of variables, such as the searcher’s location, language, and device, affect how relevant a result is to a given query.

But as a general guideline, content should adhere to E-E-A-T:

- Experience

- Expertise

- Authoritativeness

- Trustworthiness

If your content meets each of these criteria, you will strengthen your React JS SEO strategy and your web app’s search engine rankings.

Common Indexing Challenges in React SEO

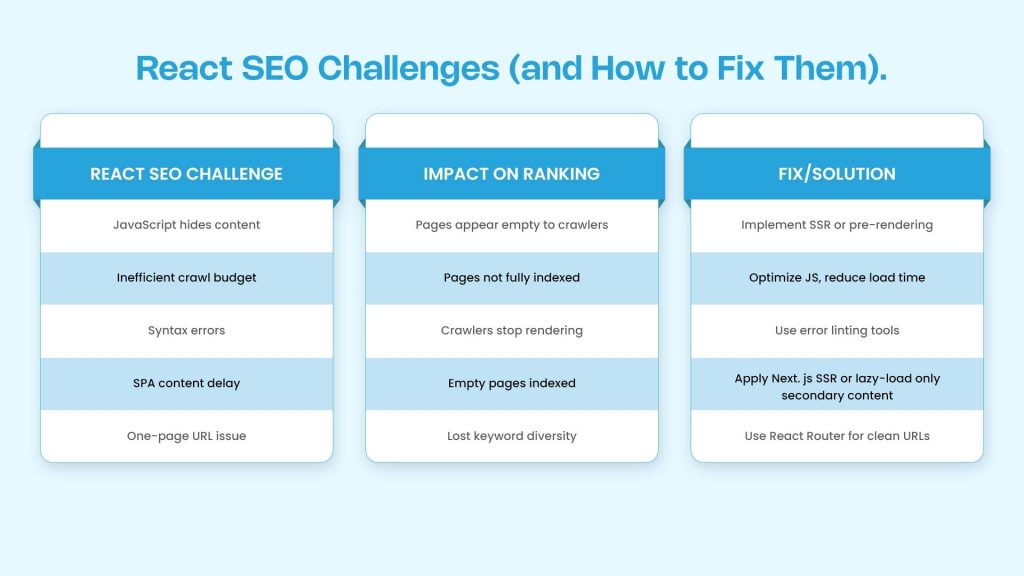

Most of Google’s indexing problems with React apps arise on pages that rely heavily on JavaScript. Let’s review the most prevalent ones:

JavaScript Obscures the Content

One of the critical mistakes a React developer can make is unintentionally hiding website content from search engines. Crawlers take much longer to discover and index important content that is created dynamically, and JavaScript often conceals key content altogether. This happens, for example, with lazy loading or infinite scrolling, where new content loads only when the user scrolls down the page.

To check whether any of your content is hidden behind JavaScript, try the following:

Load your website and note where the most important content is.

Next, disable JavaScript. If you are using Chrome, go to Menu > Settings > Privacy and security > Site settings > Content > JavaScript and turn it off.

Refresh your page to see the changes.

Browse again and search for any missing content. Verify that nothing important is missing, such as the primary body text that you want search engines to index.
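The same check can be approximated in code by looking for key phrases in the raw HTML a server returns before any JavaScript runs. The sketch below is only an illustration under that assumption; `findMissingPhrases` is a hypothetical helper, and how you obtain the raw HTML (for example by saving the page source) is left out.

```typescript
// Returns the phrases that do NOT appear in the raw HTML,
// i.e. content that only exists after JavaScript has run.
function findMissingPhrases(rawHtml: string, keyPhrases: string[]): string[] {
  const haystack = rawHtml.toLowerCase();
  return keyPhrases.filter(
    (phrase) => haystack.indexOf(phrase.toLowerCase()) === -1
  );
}

// Example input: the typical empty shell of a client-rendered React app.
const clientRenderedShell =
  '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>';

// Example input: the same page with its content already present in the HTML.
const serverRenderedPage =
  '<html><body><div id="root"><h1>React SEO Guide</h1><p>Pricing plans</p></div></body></html>';
```

With the phrases "React SEO Guide" and "Pricing plans", the shell reports both as missing while the server-rendered page reports none.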

Inefficient Use of Crawl Budget

The crawl budget refers to the maximum number of web pages that search engine spiders can crawl in a given amount of time. Crawlers are often said to wait roughly five seconds per script.

On websites that depend heavily on JavaScript, the crawl budget can be exhausted quickly because scripts take a long time to load, process, and execute. In such cases, search engine crawlers may leave the site before it is fully indexed, which is a significant challenge for React SEO.

Errors

When a JavaScript parser encounters a single unexpected character, it throws a SyntaxError and stops evaluating the current script. That means one typo or stray character can prevent the entire script from running. If Googlebot hits such an error while rendering your page, it may treat the page as empty and index it with no content.
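A small sketch makes the "one character breaks everything" point concrete. It uses the standard `Function` constructor to parse a script without running it; the helper name `tryParseScript` is hypothetical and only for illustration.

```typescript
// Parses a script source without executing it and reports the outcome.
// A single syntax error rejects the whole script, including valid lines.
function tryParseScript(source: string): string {
  try {
    // The Function constructor parses the body when it is created.
    new Function(source);
    return "ok";
  } catch (error) {
    return error instanceof SyntaxError ? "SyntaxError" : "other error";
  }
}

const validScript = "const title = 'React SEO'; const pages = 3;";
// Same script with one typo: the value after "=" is missing.
const brokenScript = "const title = 'React SEO'; const pages = ;";
```

Here `tryParseScript(validScript)` reports success, while `tryParseScript(brokenScript)` reports a SyntaxError even though the first statement is perfectly valid.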

Sitebulb states, “If we are uninformed of Google Javascript-specific rendering limitations, we may be missing some SEO efforts” (Sitebulb).

SPAs Indexing

React helps you build fast, responsive, and dynamic single-page applications (SPAs). These apps load a single page once and then load all remaining content based on user interaction.

However, SPAs have an SEO problem: they can only display their full content once everything has finished loading. If Googlebot scans a page before the content is fully rendered, a large portion of the material stays hidden from the crawler and your site’s rankings suffer.

How to Make Your JavaScript SEO-Friendly in React?

Next.js is a React framework with server-side rendering, hot code reloading, and automatic code splitting. It helps developers quickly build apps that are optimized for search engines, which makes it extremely valuable to teams that want to support SEO in every way possible.

Next.js fully supports server-side rendering (SSR), which means the HTML is generated for each request. In contrast to client-side rendering (CSR), SSR renders the HTML on the server and sends the finished HTML and CSS to the client’s browser. Each time the client requests a page, Next.js renders its HTML on the server again.

To implement SSR by hand, a developer has to set up a Node.js server that routes and processes every request. Next.js handles this out of the box, so developers can build fast, SEO-ready websites.

Metadata, Tags & Headings Optimization

By default, a React application is served from a single URL with one set of metadata specified in the document head. Because that metadata does not change from view to view, SEO suffers badly: users may not be able to share individual pages, and search bots may have trouble indexing each page’s unique content. Every shared link falls back to the default, and only the homepage has a realistic chance of ranking for its keywords.

To overcome these limits and boost SEO, each page needs its own metadata, tags, and title, which is cumbersome to manage with vanilla React. With React Helmet, meta tags can be set dynamically from within individual components of a React project.

React Helmet is a library that lets you add component-specific meta tags to your application. After installing it with npm, you add the meta tags inside the component they belong to. To better control how your site’s preview cards appear on social media, you can also add the relevant Open Graph (used by Facebook) and Twitter tags.
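To show what per-page metadata looks like, here is a dependency-free sketch that builds the head tags for one page as plain strings. It does not use React Helmet's API; in a real React app you would typically place the equivalent `<title>` and `<meta>` elements inside React Helmet's `<Helmet>` component. The names `PageMeta` and `buildHeadTags` are hypothetical.

```typescript
// Hypothetical per-page metadata; every route would supply its own values.
interface PageMeta {
  title: string;
  description: string;
  url: string;     // the page's own shareable URL
  image?: string;  // optional preview image for social cards
}

function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Builds the title, description, Open Graph, and Twitter card tags.
function buildHeadTags(meta: PageMeta): string[] {
  const tags = [
    `<title>${escapeHtml(meta.title)}</title>`,
    `<meta name="description" content="${escapeHtml(meta.description)}">`,
    `<meta property="og:title" content="${escapeHtml(meta.title)}">`,
    `<meta property="og:description" content="${escapeHtml(meta.description)}">`,
    `<meta property="og:url" content="${escapeHtml(meta.url)}">`,
    `<meta name="twitter:card" content="summary">`,
  ];
  if (meta.image !== undefined) {
    tags.push(`<meta property="og:image" content="${escapeHtml(meta.image)}">`);
  }
  return tags;
}
```

The design point is that the metadata is a function of the page being viewed, so two different routes never share the same title or description.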

Here are a few things to consider when adjusting your metadata and tags:

– For images, add appropriate alt text.

– Title tags should accurately represent the content of the page. A good title tag is also under 70 characters long, contains one primary keyword, and stands out against other clickable titles on SERPs.

– Use semantic HTML for the main content. For example, use an article tag instead of a generic div.
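The title-tag guidelines above can be turned into a simple automated check. This is a minimal sketch, assuming the 70-character limit and single primary keyword mentioned in the list; `checkTitle` and `TitleCheck` are hypothetical names, and a check like this cannot judge whether a title "stands out".

```typescript
interface TitleCheck {
  ok: boolean;
  problems: string[];
}

// Checks a page title against the length and keyword guidelines.
function checkTitle(title: string, primaryKeyword: string): TitleCheck {
  const problems: string[] = [];
  const trimmed = title.trim();

  if (trimmed.length === 0) {
    problems.push("Title is empty.");
  }
  if (trimmed.length >= 70) {
    problems.push(`Title has ${trimmed.length} characters; keep it under 70.`);
  }
  if (trimmed.toLowerCase().indexOf(primaryKeyword.toLowerCase()) === -1) {
    problems.push(`Title does not contain the keyword "${primaryKeyword}".`);
  }

  return { ok: problems.length === 0, problems };
}
```

A check like this could run in a build step or test suite so that a page with an overlong or keyword-free title is caught before it ships.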

Pre-Rendering

Pre-rendering means generating HTML versions of your site’s pages before serving them to users. Because the HTML already includes all content, spiders can access all the important information on your site.

Tools like Prerender.io and React Snapshot make pre-rendering easier. With them, you can generate static HTML versions of your React pages and serve those to crawlers instead of making them render the JavaScript-driven pages.

Pre-rendering can improve your site’s indexing and ranking in search engine results pages (SERPs), because crawlers can access all of your website’s content.
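The idea of serving static HTML to crawlers can be sketched as a small decision function. This is an illustration only and not the API of Prerender.io or React Snapshot; the crawler list is a short, non-exhaustive sample of well-known user-agent tokens, and `isCrawler` and `choosePage` are hypothetical names.

```typescript
// A few well-known crawler user-agent tokens (illustrative, not exhaustive).
const CRAWLER_PATTERN = /googlebot|bingbot|duckduckbot|baiduspider|yandex/i;

function isCrawler(userAgent: string): boolean {
  return CRAWLER_PATTERN.test(userAgent);
}

// Chooses which HTML to send: the pre-rendered snapshot for crawlers
// (when one exists), otherwise the normal JavaScript app shell.
function choosePage(
  userAgent: string,
  prerenderedHtml: string | undefined,
  appShellHtml: string
): string {
  if (isCrawler(userAgent) && prerenderedHtml !== undefined) {
    return prerenderedHtml;
  }
  return appShellHtml;
}
```

In practice this decision usually lives in server middleware or at the CDN, and the snapshot must contain the same content users see, otherwise it risks being treated as cloaking.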

Slow Rendering and Content Delays

If you have a slow home internet connection, you know the feeling of waiting five extra seconds for a website to load. Five seconds does not sound long, but experts estimate that if your website takes longer than 3 seconds to load, over half of mobile users abandon it altogether. Delayed content loading costs you website traffic.

One of the biggest problems with React specifically is that it is generally used to build a single-page application (SPA), where everything is rendered into a single page by JavaScript. Why does that hurt page speed and SEO? Google’s crawlers do not wait long.

If any part of your page loads slowly because it depends on JavaScript, crawlers may see partial or “empty” content, and your SEO will suffer.

Consider SSR with a framework like Next.js. SSR delivers fully rendered HTML from the server to the browser, so Google’s bots see the content immediately and do not have to wait for JavaScript to load.
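The essence of SSR is that the server's response already contains the content. The sketch below shows that idea with plain string rendering and no dependencies; it is not Next.js code (Next.js renders React components for you), and `Article` and `renderArticlePage` are hypothetical names.

```typescript
interface Article {
  title: string;
  body: string;
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Renders the complete HTML document on the server, so the content is
// present in the very first response and needs no JavaScript to appear.
function renderArticlePage(article: Article): string {
  return [
    "<!DOCTYPE html>",
    "<html>",
    `<head><title>${escapeHtml(article.title)}</title></head>`,
    "<body>",
    '<div id="root">',
    `<article><h1>${escapeHtml(article.title)}</h1><p>${escapeHtml(article.body)}</p></article>`,
    "</div>",
    // The client bundle can still load afterwards to make the page interactive.
    '<script src="/bundle.js"></script>',
    "</body>",
    "</html>",
  ].join("\n");
}
```

Compare this with a client-rendered shell, where the `root` element is empty until the bundle has downloaded and executed.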

Fixing URL Structures for Better React SEO

A React app behaves like a one-page app with a single URL for the whole project. Because of this, your entire site may be indexed under the homepage URL alone, which means individual pages do not build up authority and trust in the SERPs over time.

You have visited every one of your pages and know they are different, but to Google they all look the same. If Google could see that your pages are distinct, you would get much more organic traffic and the opportunity to rank for many other keywords and topics.

React Router is a routing library for React apps. It makes navigation between multiple views easier by rendering different components at different URLs, so each page of your app gets its own address.
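To illustrate what "one URL per view" means, here is a tiny route matcher written from scratch. It is not React Router's implementation or API; it only mimics the familiar `:param` pattern style, and `Route`, `matchRoute`, and the view names are hypothetical.

```typescript
interface Route {
  pattern: string; // e.g. "/blog/:slug"
  view: string;    // name of the component to render (hypothetical)
}

interface RouteMatch {
  view: string;
  params: { [name: string]: string };
}

function splitPath(path: string): string[] {
  return path.split("/").filter((segment) => segment.length > 0);
}

// Finds the first route whose pattern matches the given URL path.
function matchRoute(routes: Route[], path: string): RouteMatch | null {
  const pathParts = splitPath(path);
  for (const route of routes) {
    const patternParts = splitPath(route.pattern);
    if (patternParts.length !== pathParts.length) continue;

    const params: { [name: string]: string } = {};
    let matched = true;
    for (let i = 0; i < patternParts.length; i++) {
      if (patternParts[i].charAt(0) === ":") {
        params[patternParts[i].slice(1)] = pathParts[i]; // dynamic segment
      } else if (patternParts[i] !== pathParts[i]) {
        matched = false;
        break;
      }
    }
    if (matched) return { view: route.view, params };
  }
  return null;
}

const routes: Route[] = [
  { pattern: "/", view: "Home" },
  { pattern: "/pricing", view: "Pricing" },
  { pattern: "/blog/:slug", view: "BlogPost" },
];
```

Because every view resolves from its own path, each one can be linked, shared, and indexed separately instead of being folded into the homepage URL.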

Don’t Overlook the Essentials

While React applications require unique SEO considerations, this does not exclude other fundamentals from applying.

You’ll still have to ensure that your React applications follow best practices for:

- Semantic HTML

- Canonicalization

- Structured Data

- XML Sitemaps

- Mobile-First Design

- HTTPS

- Title Tags
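As one concrete example from this list, an XML sitemap can be generated from the list of page URLs. The sketch below follows the public sitemaps.org format; `SitemapEntry`, `buildSitemap`, and the example.com URLs are hypothetical and for illustration only.

```typescript
interface SitemapEntry {
  loc: string;       // absolute URL of the page
  lastmod?: string;  // optional last-modified date, e.g. "2024-01-15"
}

function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Builds a sitemap document with one <url> element per entry.
function buildSitemap(entries: SitemapEntry[]): string {
  const urls = entries.map((entry) => {
    const lastmod =
      entry.lastmod !== undefined
        ? `<lastmod>${escapeXml(entry.lastmod)}</lastmod>`
        : "";
    return `  <url><loc>${escapeXml(entry.loc)}</loc>${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    urls.join("\n"),
    "</urlset>",
  ].join("\n");
}
```

For a React app, the entries would usually come from the same route list the router uses, so the sitemap stays in sync with the pages that actually exist.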

Final Verdict

While React is a popular and arguably the most widely used option for building web apps, its SPA structure can pose SEO challenges. According to SEMrush, JavaScript appears in 50% of the top 20 Google search results for popular keywords. However heavily your site relies on JavaScript content, search engines may or may not be able to crawl it.

So think again: if you assume your site will rank without optimizing your React website for search engine crawlers, you are likely to run into trouble, and your visitor count and traffic will not grow.

At OCloud Solutions, we specialize in addressing React SEO challenges. We fix performance issues and crawlability concerns to make your React apps search engine friendly.